ShadowRay is an exposure in the infrastructure of Ray, an open-source artificial intelligence (AI) framework. Attackers are actively exploiting this exposure, yet Anyscale, Ray’s developer, disputes that it is a vulnerability and doesn’t intend to fix it. Understanding the dispute between Ray’s developers and security researchers reveals hidden assumptions and offers lessons for AI security, internet-exposed assets, and vulnerability scanning.


ShadowRay Explained

The AI compute platform Anyscale developed the open-source Ray AI framework, which is used mainly to manage AI workloads. The tool boasts a customer list that includes DoorDash, LinkedIn, Netflix, OpenAI, Uber, and many others.

Researchers at Oligo Security dubbed CVE-2023-48022 “ShadowRay.” The CVE notes that Ray fails to apply authorization to its Jobs API, so any unauthenticated user with network access to the dashboard can launch jobs and, through them, execute arbitrary code on the host.

The researchers calculate that this vulnerability should earn a 9.8 (out of 10) on the Common Vulnerability Scoring System (CVSS), yet Anyscale denies that the exposure is a vulnerability. Instead, Anyscale maintains that Ray is intended to be used only within a controlled environment and that the lack of authorization is by design.

ShadowRay Damages

Unfortunately, a large number of customers don’t seem to understand Anyscale’s assumption that these environments won’t be exposed to the internet. Oligo has detected hundreds of exposed servers that attackers have already compromised, and it categorized the compromises as follows:

- Accessed SSH keys: Enable attackers to connect to other virtual machines in the hosting environment, to gain persistence, and to repurpose computing capacity.

- Excessive access: Provides attackers with access to cloud environments via Ray root access or Kubernetes clusters because of embedded API administrator permissions.

- Compromised AI workloads: Affect the integrity of AI model results, allow for model theft, and potentially infect model training to alter future results.

- Hijacked compute: Repurposes expensive AI compute power for attackers’ needs, primarily cryptojacking, which mines for cryptocurrencies on stolen resources.

- Stolen credentials: Expose other resources to compromise through exposed passwords for OpenAI, Slack, Stripe, internal databases, AI databases, and more.

- Seized tokens: Allow attackers to steal funds (Stripe), conduct AI supply chain attacks (OpenAI, Hugging Face, etc.), or intercept internal communication (Slack).

If you haven’t verified that internal Ray resources reside safely behind rigorous network security controls, run the Anyscale tools to locate exposed resources now.
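For a quick, read-only spot check of hosts you own alongside Anyscale’s tools, a minimal Python sketch follows. It assumes the dashboard listens on Ray’s default port 8265 and that the Jobs REST API lists jobs at `/api/jobs/`; either can differ in your deployment. The script only sends a GET request that lists jobs and never submits one. The names `probe` and `interpret` are illustrative, not part of Ray or any Anyscale tool. Only run it against systems you are authorized to test.

```python
"""Read-only check: does a Ray dashboard answer Jobs API requests without credentials?

Assumptions: Ray's default dashboard port (8265) and the Jobs REST API
list endpoint (/api/jobs/). Only probe hosts you are authorized to test.
"""
import http.client
import urllib.error
import urllib.request

DEFAULT_DASHBOARD_PORT = 8265  # Ray's default dashboard port (configurable)
JOBS_PATH = "/api/jobs/"       # Jobs REST API endpoint that lists jobs

# ProxyHandler({}) disables environment proxies so the check measures direct reachability.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def interpret(status):
    """Map an HTTP status code (or None when no connection was made) to a verdict."""
    if status is None:
        return "unreachable"
    if status == 200:
        return "EXPOSED: Jobs API answered without credentials"
    if status in (401, 403):
        return "protected: request was rejected"
    return f"inconclusive: HTTP {status}"


def probe(host, port=DEFAULT_DASHBOARD_PORT, timeout=3.0):
    """Send one unauthenticated GET that lists jobs; nothing is submitted."""
    url = f"http://{host}:{port}{JOBS_PATH}"
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return interpret(resp.status)
    except urllib.error.HTTPError as err:  # non-2xx responses land here
        return interpret(err.code)
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return interpret(None)  # refused, timed out, or not speaking HTTP


if __name__ == "__main__":
    import sys

    for target in sys.argv[1:]:
        print(target, "->", probe(target))
```

Saved as a file (for example, a hypothetical `ray_probe.py`), it runs as `python ray_probe.py 10.0.0.5 10.0.0.6`. An `EXPOSED` result means anyone who can reach that address can also reach job submission. A rejected or unreachable result is not proof of safety, because the dashboard may simply listen on a different port.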

ShadowRay Indirect Lessons

Although the direct damages will be significant for victims, ShadowRay also exposes hidden network security assumptions overlooked in the mad dash to the cloud and to AI adoption. Let’s examine these assumptions in the context of AI security, internet-exposed resources, and vulnerability scanning.

AI Security Lessons

In the rush to harness AI’s perceived power, companies put initiatives into the hands of AI experts who naturally focus on their primary objective: obtaining AI model results. That focus is understandable, but it leads companies to overlook three key hidden issues exposed by ShadowRay: AI experts lack security expertise, AI data needs encryption, and AI models need source tracking.

AI Experts Lack Security Expertise

Anyscale assumes the environment is secure, just as AI researchers assume Ray is secure. Neil Carpenter, Field CTO at Orca Security, notes, “If the endpoint isn’t going to be authenticated, it could at least have network controls to block access outside the immediate subnet by default. It’s disappointing that the authors are disputing this CVE as being by-design rather than working to address it.”

The gap between Anyscale’s response and how Ray is actually deployed shows that AI experts don’t necessarily possess a security mindset. The hundreds of exposed servers indicate that many organizations need to add security expertise to their AI teams or include security oversight in their operations. Organizations that continue to assume their systems are secure will suffer data breaches, compliance violations, and other damages.

AI Data Needs Encryption

Attackers easily detect and locate unencrypted sensitive information, especially the data Oligo researchers describe as the “models or datasets [that] are the unique, private intellectual property that differentiates a company from its competitors.”

AI data becomes a single point of failure for data breaches and the exposure of company secrets, yet organizations budgeting millions for AI research neglect to fund the security required to protect it. Fortunately, commercially available encryption solutions, such as application layer encryption (ALE), can add protection to AI data whether modeling happens internally or externally.

AI Models Need Source Tracking

As AI models digest information for modeling, AI programmers assume all data is good data and that ‘garbage-in-garbage-out’ will never apply to AI. Unfortunately, if attackers can insert false external data into a training data set, the model will be influenced, if not outright skewed. Yet AI data protection remains challenging.

“This is a rapidly evolving field,” admits Carpenter. “However … existing controls will help to protect against future attacks on AI training material; for example, the first lines of defense would include limiting access, both by identity and at the network layer, and auditing access to the data used to train the AI models. Securing a supply chain that includes AI training starts in the same way as securing any other software supply chain — a strong foundation.”

Traditional defenses help protect internal AI data sources, but protection becomes far more complicated when incorporating data sources from outside the organization. Carpenter suggests that third-party data requires additional consideration to avoid issues such as “malicious poisoning of the data, copyright infringement, and implicit bias.” Data scrubbing to avoid these issues must be done before the data is added to servers for AI model training.

Perhaps some researchers find all results reasonable, even AI hallucinations. Yet fictionalized or corrupted results will mislead anyone attempting to apply them in the real world. A healthy dose of skepticism should motivate tracking the authenticity, validity, and appropriate use of any data that influences AI models.
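Source tracking can start with something as simple as a hash manifest. The Python sketch below records a SHA-256 digest, a source label, and an approver for each training file once the data has been vetted, then reports files that were added, removed, or modified since that snapshot. The function names and metadata fields are hypothetical. The approach only detects changes made after vetting; it cannot tell whether data was already poisoned before the manifest was built, which is the job of the scrubbing step described above.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Hash a file in 64 KiB chunks so large training files don't fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir, source, approved_by):
    """Record a hash plus provenance metadata for every file under data_dir.

    Store the result outside data_dir, or it will show up as an added file.
    """
    root = Path(data_dir)
    return {
        str(p.relative_to(root)): {
            "sha256": sha256_of(p),
            "source": source,            # hypothetical label: where the data came from
            "approved_by": approved_by,  # hypothetical label: who vetted and scrubbed it
        }
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify(data_dir, manifest):
    """Compare the directory's current contents with a previously built manifest."""
    root = Path(data_dir)
    current = {str(p.relative_to(root)): sha256_of(p)
               for p in root.rglob("*") if p.is_file()}
    return {
        "added": sorted(set(current) - set(manifest)),
        "removed": sorted(set(manifest) - set(current)),
        "modified": sorted(name for name in set(current) & set(manifest)
                           if current[name] != manifest[name]["sha256"]),
    }
```

Any non-empty list from `verify` is a signal to hold training until someone confirms where the new or changed data came from.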

Internet Exposed Resources Lessons

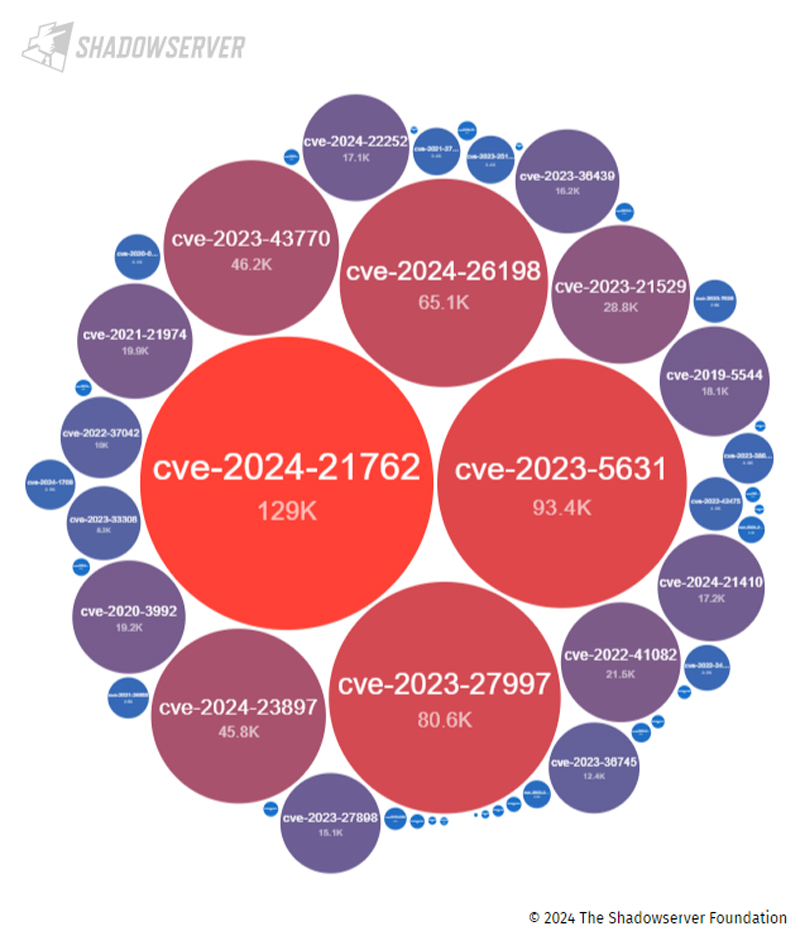

ShadowRay poses a problem because the AI teams exposed the infrastructure to public access. However, many others leave resources on the internet accessible with significant security vulnerabilities open to exploitation. For example, the image below depicts the hundreds of thousands of IP addresses with critical-level vulnerabilities that the Shadowserver Foundation detected accessible from the internet!

A search for vulnerabilities of all severity levels exposes millions of potential issues, and even that count excludes disputed CVEs such as ShadowRay and other accidentally misconfigured but accessible infrastructure. A cloud-native application protection platform (CNAPP) or even a cloud resource vulnerability scanner can help detect exposed vulnerabilities.

Unfortunately, scans only cover resources that security teams know about, so AI development teams and others deploying resources must submit those resources to security for tracking or scanning. AI teams likely launch resources independently for budgeting and rapid deployment purposes, but security teams must still be informed of their existence to apply cloud security best practices to the infrastructure.
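One lightweight way to catch resources that never reached security is to reconcile what actually runs in the cloud against the security team’s registered inventory. In this Python sketch, the `Asset` records stand in for a hypothetical export from a cloud provider’s inventory API, and the registry is a plain set of asset IDs. Unregistered assets with a public IP are listed first because they most resemble the exposed Ray clusters behind ShadowRay.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Asset:
    asset_id: str
    owner_team: str
    public_ip: Optional[str]  # None when the asset has no public address


def shadow_assets(discovered: Iterable[Asset], registered_ids: Set[str]) -> List[Asset]:
    """Return assets security has not registered, internet-facing ones first."""
    unknown = [a for a in discovered if a.asset_id not in registered_ids]
    # False sorts before True, so assets that have a public IP come first.
    return sorted(unknown, key=lambda a: (a.public_ip is None, a.asset_id))


if __name__ == "__main__":
    # Hypothetical inventory export; 203.0.113.0/24 is a documentation range.
    discovered = [
        Asset("vm-ray-head", "ai-research", "203.0.113.10"),
        Asset("vm-db", "platform", None),
        Asset("vm-etl", "data-eng", None),
    ]
    registered = {"vm-db"}
    for asset in shadow_assets(discovered, registered):
        exposure = asset.public_ip or "private"
        print(f"unregistered: {asset.asset_id} (owner {asset.owner_team}, {exposure})")
```

Reporting the owning team alongside each finding gives security someone to contact rather than just an anonymous resource ID.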

Vulnerability Scanning Lessons

Anyscale’s dispute of CVE-2023-48022 puts the vulnerability into a gray zone along with many other disputed CVEs. These range from issues that have yet to be proven and may not be valid to those where the product works as intended, just in an insecure fashion (such as ShadowRay).

These disputed vulnerabilities merit tracking through either a vulnerability management tool or a risk management program. They also merit special attention because of two key lessons exposed by ShadowRay: first, vulnerability scanning tools vary in how they handle disputed vulnerabilities, and second, these vulnerabilities need active tracking and verification.

Watch for Variance in Disputed Vulnerability Handling

Different vulnerability scanners and threat feeds will handle disputed vulnerabilities differently. Some will omit disputed vulnerabilities, others might include them as optional scans, and others might include them as different types of issues.

For example, Carpenter reveals that “Orca took the approach of addressing this as a posture risk instead of a CVE-style vulnerability … This is a more consumable approach for organizations as a CVE would typically be addressed with an update (which won’t be available here) but a posture risk is addressed by a configuration change (which is the right approach for this problem).” IT teams need to actively track how a specific tool will handle a specific vulnerability.
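Treating ShadowRay as a posture risk means looking for configurations that let untrusted networks reach Ray. The Python sketch below, which is not Orca’s implementation, flags firewall-style ingress rules that open Ray’s default ports (6379 for the GCS, 8265 for the dashboard and Jobs API, 10001 for Ray Client) to any source outside the RFC 1918 private ranges. The `IngressRule` format is a hypothetical normalized export of cloud firewall rules, and real clusters may use non-default ports.

```python
import ipaddress
from typing import List, NamedTuple

# Ray's default ports; a cluster can be configured to use others.
RAY_DEFAULT_PORTS = {6379: "GCS", 8265: "dashboard/Jobs API", 10001: "Ray Client"}

# Sources treated as internal. Tighten this to the cluster's own subnet where possible.
INTERNAL_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


class IngressRule(NamedTuple):
    resource: str     # e.g. a security group or firewall name
    port_from: int
    port_to: int      # inclusive
    source_cidr: str  # e.g. "0.0.0.0/0"


def is_internal(cidr: str) -> bool:
    """True if the whole source range sits inside one of INTERNAL_RANGES."""
    src = ipaddress.ip_network(cidr, strict=False)
    # subnet_of() raises TypeError across IP versions, so compare like with like.
    return any(src.version == net.version and src.subnet_of(net)
               for net in INTERNAL_RANGES)


def ray_posture_findings(rules: List[IngressRule]) -> List[str]:
    """List every Ray default port that a non-internal source can reach."""
    findings = []
    for rule in rules:
        if is_internal(rule.source_cidr):
            continue
        for port, service in sorted(RAY_DEFAULT_PORTS.items()):
            if rule.port_from <= port <= rule.port_to:
                findings.append(
                    f"{rule.resource}: {service} port {port} open to {rule.source_cidr}")
    return findings
```

Each finding maps to the configuration change Carpenter describes: narrow the rule’s source range rather than wait for a patch that won’t arrive.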

Actively Track & Verify Vulnerabilities

Tools promise to make processes easier, but sadly, the easy button for security simply doesn’t exist yet. With regard to vulnerability scanners, it often isn’t obvious whether an existing tool scans for a specific vulnerability.

Security teams must actively follow which vulnerabilities may affect the IT environment and verify that their tools check for the specific CVEs of concern. For disputed vulnerabilities, additional steps may be needed, such as filing a request with the vulnerability scanner’s support team to confirm how the tool will or won’t address that specific vulnerability.
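Part of this verification can be automated if a scanner can export the checks it ships. This Python sketch assumes a hypothetical export that maps each CVE ID to a handling mode (`default`, `optional`, or `posture`), mirroring the omit, optional, and different-issue-type behaviors described earlier, and compares it with a watchlist of CVEs of concern. Anything missing becomes a question for the vendor.

```python
def coverage_report(watchlist, scanner_coverage):
    """Compare CVEs of concern with what one scanner says it checks.

    watchlist:        {cve_id: {"disputed": bool}}
    scanner_coverage: {cve_id: mode} from a hypothetical vendor export,
                      where mode is "default", "optional", or "posture"
    """
    report = {}
    for cve, meta in sorted(watchlist.items()):
        mode = scanner_coverage.get(cve)
        if mode is None:
            note = " (disputed CVE)" if meta.get("disputed") else ""
            report[cve] = "NOT COVERED: confirm with the vendor" + note
        elif mode == "default":
            report[cve] = "covered by default scans"
        elif mode == "optional":
            report[cve] = "covered only if the optional check is enabled"
        elif mode == "posture":
            report[cve] = "reported as a posture (configuration) risk, not a CVE"
        else:
            report[cve] = f"unrecognized mode {mode!r}: verify manually"
    return report


if __name__ == "__main__":
    watchlist = {
        "CVE-2023-48022": {"disputed": True},   # ShadowRay
        "CVE-2021-44228": {"disputed": False},  # Log4Shell, for comparison
    }
    scanner_a = {"CVE-2021-44228": "default"}  # hypothetical export
    for cve, status in coverage_report(watchlist, scanner_a).items():
        print(f"{cve}: {status}")
```

Running the same watchlist against each tool’s export also shows where multiple scanners overlap and where only one of them, or none, covers a given CVE.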

To further reduce the risk of exposure, use multiple vulnerability scanning tools and penetration tests to validate the potential risk of discovered vulnerabilities and to uncover additional issues. For ShadowRay, Anyscale provided one tool, but free open-source vulnerability scanners can provide useful additional coverage.

Bottom Line: Check & Recheck for Significant Vulnerabilities

You don’t have to be vulnerable to ShadowRay to appreciate the indirect lessons the issue teaches about AI risks, internet-exposed assets, and vulnerability scanning. The consequences of an actual attack are painful, but continuous scanning of critical infrastructure can locate potential vulnerabilities so they can be resolved before an attacker causes damage.

Be aware of the limitations of vulnerability scanning tools, AI modeling, and employees rushing to deploy cloud resources. Create mechanisms for teams to collaborate on security, and implement a system to continuously monitor for potential vulnerabilities through research, threat feeds, vulnerability scanners, and penetration testing.

For additional help in learning about potential threats, consider reading about threat intelligence feeds.