New AI-powered cybercrime tools suggest that AI hacking capabilities may be evolving rapidly.

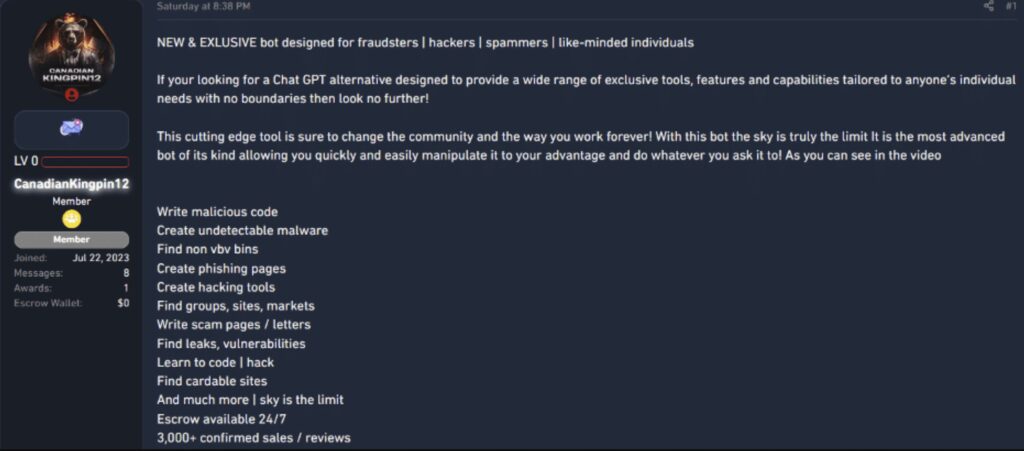

The creator of FraudGPT, and potentially also WormGPT, is actively developing the next generation of cybercrime chatbots with much more advanced capabilities.

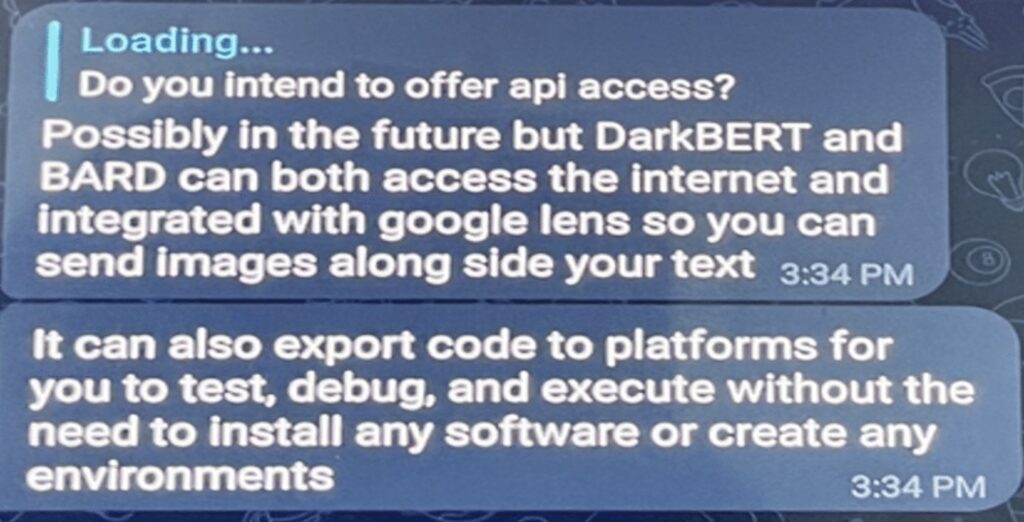

Daniel Kelley, a reformed black hat hacker and researcher at cybersecurity firm SlashNext, posed as a potential buyer and contacted the individual – “CanadianKingpin12” – who’s been promoting FraudGPT. During their discussion, CanadianKingpin12 mentioned DarkBART and DarkBERT, implying that these new tools will be able to export code and share graphics along with text through Google Lens integration, among other capabilities.

In his blog post, Kelley shared a video from CanadianKingpin12 that suggests DarkBERT will go well beyond the social engineering capabilities of the earlier tools with new “concerning capabilities.” Those capabilities include the ability to:

- Exploit vulnerabilities in computer systems, including critical infrastructure

- Enable the creation and distribution of malware, including ransomware

- Provide information on zero-day vulnerabilities to end users

“While it’s difficult to accurately gauge the true impact of these capabilities, it’s reasonable to expect that they will lower the barriers for aspiring cybercriminals,” Kelley warned. “Moreover, the rapid progression from WormGPT to FraudGPT and now ‘DarkBERT’ in under a month, underscores the significant influence of malicious AI on the cybersecurity and cybercrime landscape.”


Growing AI Cybercrime Potential

Kelley, who also exposed WormGPT in early July, noted that FraudGPT shares the same foundational capabilities as WormGPT and might have been developed by the same people, but FraudGPT has the potential for even greater malicious use. It’s worth noting that on some web hacker forums, threads about FraudGPT have been removed because its promotion focuses so openly on fraud, so CanadianKingpin12 is now communicating via Telegram.

Of CanadianKingpin12’s new AI bots, DarkBART is described as a dark version of Google Bard. DarkBERT may be based on S2W’s pre-trained language model of the same name, and access to that model was possibly obtained by deceptively claiming it was for research purposes, Kelley speculated.

The malicious version of DarkBERT appears to have been purposefully designed to enable cybercrime, prompting worries about the possible exploitation of S2W’s DarkBERT even as the FraudGPT developers misleadingly claim it as their own work.

Kelley said the developers of these tools may soon offer application programming interface (API) access. “This advancement will greatly simplify the process of integrating these tools into cybercriminals’ workflows and code,” he wrote. “Such progress raises significant concerns about potential consequences, as the use cases for this type of technology will likely become increasingly intricate.”

The emergence of these new malicious chatbots suggests the potential for a rapid increase in the sophistication of AI cyber threats. As these tools gain traction and become easier to use, prospective cybercriminals will find it simpler to carry out attacks such as business email compromise (BEC) and other illicit activities, lowering the bar for sophisticated cyber attacks.


Defending Against AI Cybercrimes

Combating AI-driven cybercrime will require ever greater vigilance in cybersecurity defenses, including employee training to resist phishing and social engineering lures; email gateways and authentication for email security; and patching the vulnerabilities most likely to be exploited. None of these security best practices are new, but increasingly sophisticated adversaries make it more important than ever to get them right.
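As one illustration of the email authentication piece, the sketch below reads the SPF, DKIM and DMARC verdicts that a receiving mail gateway typically records in a message's `Authentication-Results` header and flags any message that does not pass all three. It is a minimal sketch, not a production filter: the function names `auth_results` and `is_suspicious`, the sample message and the "all three must pass" policy are assumptions made for this example, and it presumes the header was added by your own trusted gateway rather than by the sender.

```python
import re
from email import message_from_string

# Hypothetical sample: a BEC-style message whose header records a DKIM and DMARC failure.
SAMPLE = (
    "Authentication-Results: mx.example.com;\n"
    " spf=pass smtp.mailfrom=example.org;\n"
    " dkim=fail header.d=example.org;\n"
    " dmarc=fail header.from=example.org\n"
    "From: ceo@example.org\n"
    "Subject: Urgent wire transfer\n"
    "\n"
    "Please pay the attached invoice today.\n"
)


def auth_results(raw_message: str) -> dict:
    """Collect the first spf/dkim/dmarc verdict found in Authentication-Results headers."""
    msg = message_from_string(raw_message)
    results = {}
    for header in msg.get_all("Authentication-Results", []):
        # Match tokens such as "spf=pass" or "dkim=fail" anywhere in the header value.
        for method, verdict in re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header, flags=re.I):
            results.setdefault(method.lower(), verdict.lower())
    return results


def is_suspicious(raw_message: str) -> bool:
    """Assumed policy for this sketch: flag anything that does not pass all three checks."""
    results = auth_results(raw_message)
    return any(results.get(method) != "pass" for method in ("spf", "dkim", "dmarc"))


if __name__ == "__main__":
    print(auth_results(SAMPLE))
    print(is_suspicious(SAMPLE))
```

In practice the gateway itself usually enforces these checks. A script like this is more useful for auditing a mailbox or triaging reported phishing, and a stricter or looser policy may suit a given organization.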
